User:Ankeshanand/GSoC14/proposal

From BRL-CAD


Personal Information

| Field | Value |
| --- | --- |
| Name | Ankesh Anand |
| Email Address | ankeshanand1994@gmail.com |
| IRC (nick) | ankesh11 |
| Phone Number | +91 8348522098 |
| Time Zone | UTC +0530 |

Brief Background

Project Information

Project Title

Benchmark Performance Database

Abstract

The BRL-CAD Benchmark Suite has been used for years to analyse and compare system performance. The goal of this project is to deploy a database and visualization website that provides multiple mechanisms for adding new benchmark logs to the database, along with various visualizations of the aggregate data.

Detailed Project Description

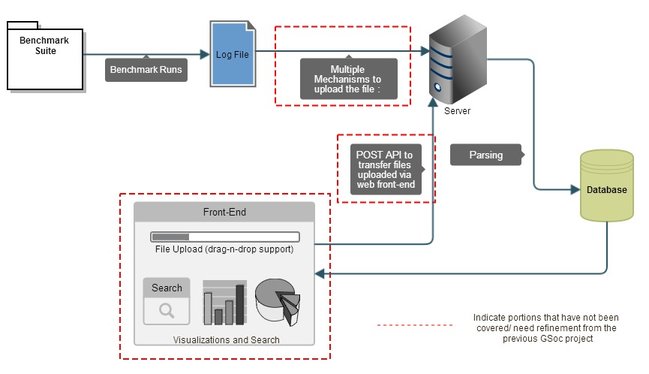

A Diagrammatic Overview

- Each benchmark run produces a log file containing the results of the ray tracer on a set of sample databases, along with a linear performance metric, system state information and CPU information. These log files are uploaded to a server through one of several mechanisms: email (via a mail server), FTP, scp, or an HTTP API. On the server, a parser reads the information from each log file and stores it in a database whose schema should index all of the information in the log. The front-end pulls the aggregate data from the database and displays it as plots and tables. The web front-end also provides a file upload mechanism, as well as a search feature that filters results by parameters such as machine description, version and results.

The current status of the Project

- An earlier GSoC project[1] did a good job of building a robust parser and capturing all the relevant information in the database, and I plan to reuse its parser and database modules. Its front-end, however, is built with the Python Bottle framework and the Google Charts library, which is not robust enough for the data visualizations this project needs. I expect to be more productive by implementing the front-end from scratch, as detailed in the following sections.

Mechanisms for Upload

- Emails

- The current de facto standard for submitting benchmark results is to email them to benchmark@brlcad.org. Any benchmark performance database must therefore support parsing these emails and retrieving the attached logs. For this I plan to use the php-mime-mail-parser[1] library, a wrapper around PHP's mailparse extension, to parse the messages and extract their attachments. It is fast and simple to use:

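```php
<?php
// Load the php-mime-mail-parser library (requires PHP's mailparse extension).
require_once('MimeMailParser.class.php');

// Read the raw message, e.g. when the mail server pipes each message
// addressed to benchmark@brlcad.org into this script on standard input.
$text = file_get_contents('php://stdin');

// Instantiate the MIME parser.
$Parser = new MimeMailParser();

// Set the email text for parsing.
$Parser->setText($text);

// Get the attachments (the benchmark logs) to hand on to the log parser.
$attachments = $Parser->getAttachments();
```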

- Drag-n-Drop Files

- The front-end will provide a convenient drag-and-drop interface for uploading log files. An uploader script that accepts HTTP POST requests will handle the server side, and the drag-and-drop feature can be implemented with the DropzoneJS[2] library. Because the upload endpoint is plain HTTP POST, log files can also be sent with a command-line tool such as curl.
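A minimal sketch of the server side of this upload path, assuming a PHP script (called `upload.php` here, a hypothetical name) and DropzoneJS's default form field name `file`. The spool directory and the rule that only `.log` files are accepted are illustrative assumptions, not decisions made in this proposal.

```php
<?php
// Hypothetical upload endpoint (upload.php). "file" is DropzoneJS's default
// paramName; UPLOAD_DIR and the ".log" check are assumptions.
const UPLOAD_DIR = '/var/spool/benchmark/incoming';

// Reduce a client-supplied file name to a safe basename, or return null
// if it does not look like a log file.
function safe_log_name(string $original): ?string
{
    $name = basename($original);                             // drop any directory part
    $name = preg_replace('/[^A-Za-z0-9._-]/', '_', $name);   // neutralise odd characters
    if (!preg_match('/\.log$/', $name)) {
        return null;
    }
    return $name;
}

if (isset($_FILES['file'])) {
    $upload = $_FILES['file'];
    $name = safe_log_name($upload['name']);
    if ($upload['error'] !== UPLOAD_ERR_OK || $name === null) {
        http_response_code(400);
        exit("Rejected upload\n");
    }
    // Prefix a unique id so concurrent uploads never overwrite each other.
    $dest = UPLOAD_DIR . '/' . uniqid('', true) . '-' . $name;
    if (!move_uploaded_file($upload['tmp_name'], $dest)) {
        http_response_code(500);
        exit("Could not store upload\n");
    }
    echo "Queued for parsing\n";
}
```

The same endpoint also serves command-line users, e.g. `curl -F "file=@run.log" http://<server>/upload.php`. The script only stores the file; parsing is left to a separate step, so a slow parse never blocks the HTTP request.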

- FTP

- Files can also be uploaded to the server with an FTP client. A cron job checks for new files at a regular interval (say, every 5 minutes) and passes them on to the parser.
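Here is one possible shape for the cron job, sketched in PHP. It assumes that FTP uploads land in an "incoming" directory and that parsed files are moved to a "processed" directory (both hypothetical). The `$parse` callback stands in for the existing parser module.

```php
<?php
// Hypothetical poller for FTP uploads, run from cron every 5 minutes, e.g.
//   */5 * * * * php /path/to/poll_incoming.php /srv/ftp/incoming /srv/ftp/processed
// Directory layout and the parse callback are assumptions.

// Hand every regular file in $incoming to $parse, then move it into
// $processed so it is never parsed twice. Files modified less than
// $minAgeSeconds ago are skipped because an FTP client may still be
// writing them. Returns the number of files handled.
function process_incoming(string $incoming, string $processed, callable $parse, int $minAgeSeconds = 60): int
{
    $count = 0;
    foreach (scandir($incoming) as $entry) {
        $path = $incoming . '/' . $entry;
        if (!is_file($path)) {
            continue; // skips ".", ".." and subdirectories
        }
        if (time() - filemtime($path) < $minAgeSeconds) {
            continue; // possibly still uploading; retry on the next run
        }
        $parse($path);
        rename($path, $processed . '/' . $entry);
        $count++;
    }
    return $count;
}

// Command-line entry point: php poll_incoming.php <incoming-dir> <processed-dir>
if (PHP_SAPI === 'cli' && isset($argv[1], $argv[2])) {
    $n = process_incoming($argv[1], $argv[2], function (string $path): void {
        // Placeholder: invoke the existing log parser on $path here.
        echo "parsing $path\n";
    });
    echo "$n file(s) processed\n";
}
```

Moving each file after parsing keeps the job idempotent. If a run fails halfway, the unprocessed files simply stay in the incoming directory for the next run.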

Data-Visualizations

- Framework

- I evaluated a few tools for the project, and in my opinion d3.js is the best library for creating dynamic, interactive data visualizations in the browser. In contrast to many other libraries, D3 gives fine-grained control over the final visual result and is versatile enough to support a wide range of chart types. I have used d3.js in past projects, which should help.

- Types of Analytics

- (This needs discussion with the community; some preliminary ideas are listed below.)

- Average Performance of Different Architectures against Reference Images (A Grouped Bar Chart)

- A comparison of different architectures with respect to the VGR metric.

- Historical progress in performance across different processor families.

- Performance against Number of CPUs.

- Categorization

- Several categorizations are possible for the final analytics, for example by processor family or by machine type (desktop, workstation, server).
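The charts would be drawn in the browser with d3.js, but the grouping can be done on the server. This is a minimal sketch that assumes the parsed runs are available as associative arrays with hypothetical fields such as `cpus`, `processor_family` and `vgr`. One function covers both an analytic (average VGR per CPU count) and a categorization (average per processor family), and its output can be sent to the front-end as JSON.

```php
<?php
// Average $metric over rows sharing the same $key value, sorted by key.
// Field names such as "cpus" and "vgr" are hypothetical, not the real schema.
function average_by(array $rows, string $key, string $metric): array
{
    $sums = [];
    $counts = [];
    foreach ($rows as $row) {
        $k = $row[$key];
        $sums[$k] = ($sums[$k] ?? 0) + $row[$metric];
        $counts[$k] = ($counts[$k] ?? 0) + 1;
    }
    ksort($sums); // stable ordering for the chart's x-axis
    $out = [];
    foreach ($sums as $k => $sum) {
        $out[] = [$key => $k, $metric => $sum / $counts[$k]];
    }
    return $out;
}
```

For example, `echo json_encode(average_by($rows, 'processor_family', 'vgr'));` would yield an array of objects that a d3 chart can bind with `selection.data()`. Group keys should be strings or integers, since PHP converts float array keys to integers.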

Search Functionality

- One goal of the project is to let users run a wide range of queries on the data. Because the parsed logs are stored in a structured database, they can be searched by parameters such as processor family, number of CPUs and other machine descriptions. The results could be presented in tabular form.
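This sketch shows how search-form fields might become a safe query. It assumes a hypothetical `benchmark_runs` table whose searchable columns appear in the whitelist below. Only whitelisted columns are accepted, and every value is bound as a parameter, so user input never becomes part of the SQL text.

```php
<?php
// Hypothetical searchable columns of a hypothetical benchmark_runs table.
const SEARCHABLE = ['processor_family', 'cpu_count', 'os', 'brlcad_version'];

// Turn form filters (column => value) into [sql, params] for a prepared
// statement. Unknown columns and empty fields are ignored.
function build_search_query(array $filters): array
{
    $clauses = [];
    $params = [];
    foreach ($filters as $column => $value) {
        if (!in_array($column, SEARCHABLE, true) || !is_scalar($value) || $value === '') {
            continue;
        }
        $clauses[] = "$column = :$column";   // column name comes from the whitelist
        $params[":$column"] = $value;        // value is bound, never interpolated
    }
    $sql = 'SELECT * FROM benchmark_runs';
    if ($clauses) {
        $sql .= ' WHERE ' . implode(' AND ', $clauses);
    }
    return [$sql, $params];
}
```

With PDO the result would be used as `[$sql, $params] = build_search_query($_GET); $stmt = $pdo->prepare($sql); $stmt->execute($params);`, and the fetched rows rendered as the results table.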

Deployment

Deliverables

- Implement mechanisms to add new performance data into the database.

- Multiple forms of visualizations for the aggregate data.

- A search functionality to enable users to perform queries over the data.

- Integrate the parser and database modules from the previous GSoC project.

- Detailed documentation for the project.

- A deployable database and visualization website for the BRL-CAD Benchmark Suite.